Points of Contact: Tom DeVore (540.568.6672), Isaiah Sumner (540.568.6670)

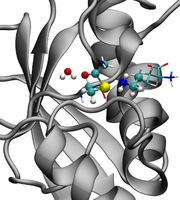

Computation has become an indispensable tool for the modern chemist. Simulations of chemical and biochemical processes routinely provide atomic-level detail that is difficult or impossible for traditional experimental techniques to achieve. Therefore, the JMU Department of Chemistry and Biochemistry operates two high-performance computing (HPC) clusters dedicated to computational chemistry: Faust and Cagliostro. An additional HPC system will be delivered and installed in September 2023.

Faust is composed of eight Dell R420 compute nodes and a Dell R720 head node. Each compute node has two 1.9 GHz six-core Intel Xeon processors and 48 GB of memory, whereas the head node has two 2.2 GHz eight-core Intel Xeon processors and 64 GB of memory. In total, Faust has 10 TB of shared disk space for data storage. The nodes are linked by a 10 GbE interconnect, and the HPL-Linpack benchmark measured Faust at 617 GFLOPS across all eight compute nodes.
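To put the benchmark figure in context, the arithmetic behind Faust's aggregate resources can be sketched in a few lines of Python. The node counts, clock speed, memory, and the 617 GFLOPS result come from the description above; the value of 8 double-precision floating-point operations per core per cycle is an assumption made here for illustration (it depends on the exact processor generation, which is not stated), so the resulting peak and efficiency are rough estimates only. The function names are hypothetical.

```python
def aggregate(nodes, sockets_per_node, cores_per_socket, mem_gb_per_node):
    """Return (total cores, total memory in GB) across identical compute nodes."""
    cores = nodes * sockets_per_node * cores_per_socket
    memory_gb = nodes * mem_gb_per_node
    return cores, memory_gb


def peak_gflops(cores, clock_ghz, flops_per_cycle):
    """Theoretical peak = cores x clock (GHz) x FLOPs per core per cycle."""
    return cores * clock_ghz * flops_per_cycle


# Faust compute nodes, as described above (head node excluded).
faust_cores, faust_mem_gb = aggregate(
    nodes=8, sockets_per_node=2, cores_per_socket=6, mem_gb_per_node=48
)

# ASSUMPTION: 8 double-precision FLOPs per core per cycle.
# The source does not state this; adjust it for the actual CPU model.
ASSUMED_FLOPS_PER_CYCLE = 8

faust_peak = peak_gflops(faust_cores, 1.9, ASSUMED_FLOPS_PER_CYCLE)
faust_hpl_gflops = 617  # measured HPL-Linpack result quoted above
faust_efficiency = faust_hpl_gflops / faust_peak

print(f"Compute cores: {faust_cores}")
print(f"Compute-node memory: {faust_mem_gb} GB")
print(f"Estimated peak (assumed FLOPs/cycle): {faust_peak:.1f} GFLOPS")
print(f"HPL efficiency under that assumption: {faust_efficiency:.0%}")
```

Changing `ASSUMED_FLOPS_PER_CYCLE` shows how sensitive the efficiency estimate is to that one unverified parameter; the core and memory totals follow directly from the stated hardware.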

Cagliostro is a hybrid GPU/CPU cluster from Exxact. It is composed of five compute nodes and one head node. Each compute node has two 2.4 GHz six-core Intel Xeon CPUs, four NVIDIA GeForce GTX 980 GPUs, and 32 GB of memory. The head node also has two 2.4 GHz six-core Intel Xeon CPUs and 32 GB of memory. In total, Cagliostro has 10 TB of shared disk space, and its nodes are linked by a 10 GbE interconnect.

The third HPC system will have two AMD EPYC 7763 CPUs (128 cores in total at 2.45 GHz), four NVIDIA A100 GPUs (80 GB each), 1 TB of RAM, 7 TB of NVM storage, and a 25 GbE interconnect.

The College of Science and Mathematics also built a computing cluster in 2023, which is located in the Frye Building. It is currently being beta tested, with the hope of putting it into production in 2024.

Software running on these clusters includes Gaussian09, AmberMD, CP2K, CFour, GaussView5, and VMD, enabling electronic structure calculations, molecular dynamics, hybrid quantum mechanics/molecular mechanics (QM/MM) simulations, and molecular visualization, manipulation, and analysis.