
The final steps of the Assessment Cycle involve reporting assessment results and, most importantly, using those results to make programmatic changes to improve student learning. During these steps of the process, you will need to consider questions such as:

- How can assessment results be communicated in a way that is clear, concise, compelling, and useful?

- If assessment results are positive, how should they be disseminated and used?

- If assessment results are negative, how should they be disseminated and used?

- Once a programmatic change has been made based on assessment results, how do we know if this change was an improvement?

Reporting Results

When communicating assessment results, the primary goal should always be to encourage action. Along these lines, results have the best chance of being used when they 1) tell a meaningful story, 2) are clear, concise, and compelling, and 3) adequately address reasonable critiques. The general guidelines below were adapted from Linda Suskie's book, Assessing Student Learning (2010). To learn more about these and other considerations for reporting assessment results, read the chapter entitled "Sharing Assessment Results with Internal and External Audiences."

Tell a Meaningful Story


- Tailor assessment results to your audience (understand their needs, perspectives, and priorities)

- Highlight interesting and unanticipated findings

- Emphasize meaningful differences

- Focus on matters your audience can do something about

- Provide context for your results

- Offer informed commentary

Be Clear, Concise, and Compelling


- Avoid jargon

- Use numbers sparingly

- Use data visualization techniques, when appropriate, to communicate clearly and compellingly

Prepare for Critics


- Provide corroborating information (triangulation)

- Document the quality of your assessment strategy

- Acknowledge possible flaws in your assessment strategy


Data Visualization

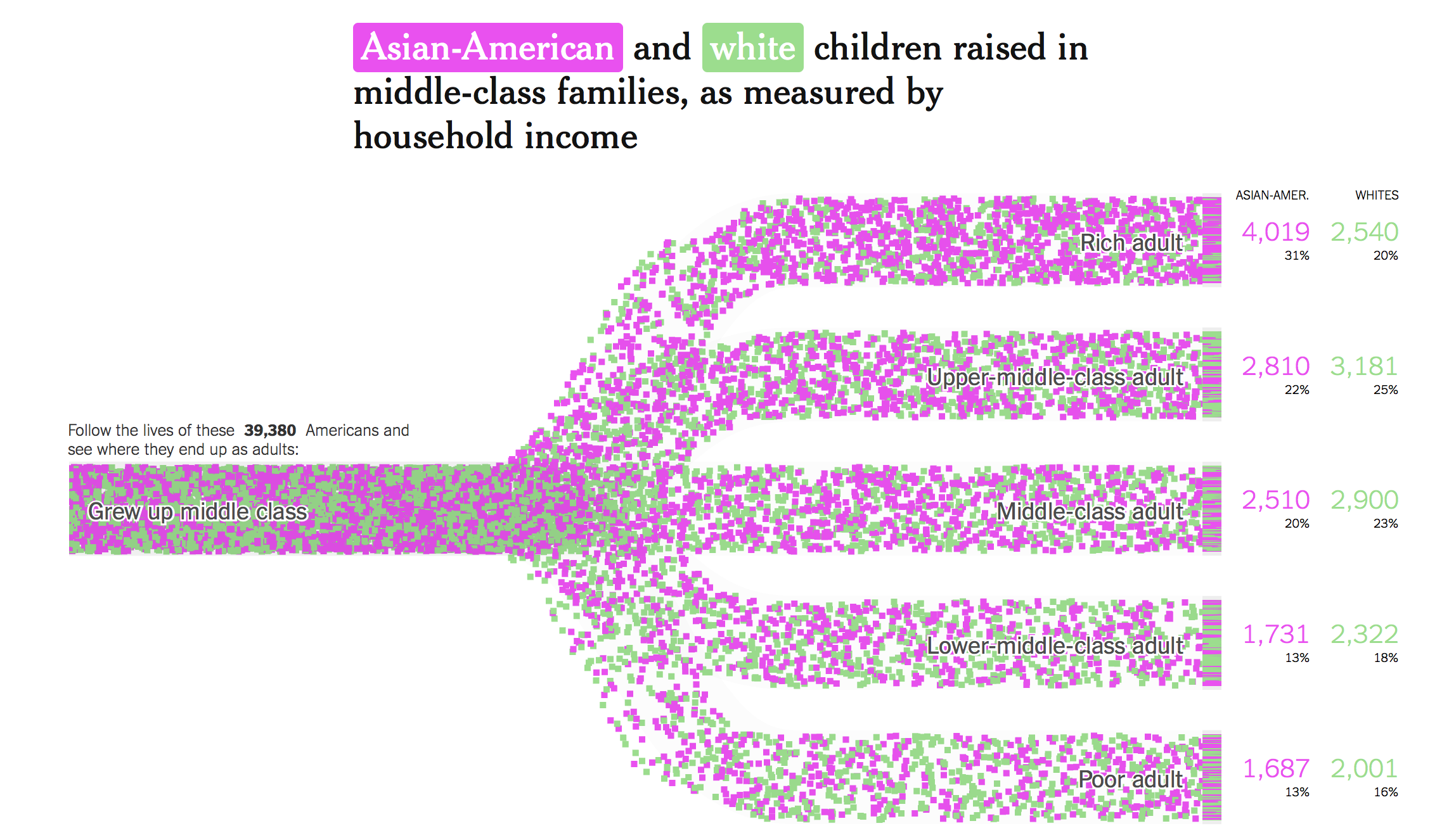

Data visualization refers to the conversion of data sources into visual representations. Visualizations can range from simple tables and graphs to more complex infographics and interactive media. Good data visualizations communicate assessment results more intuitively and more compellingly than traditional formats. By making it easier for stakeholders to glean important information from the data, we increase the chances that results will be discussed and used.


- Learn about the Do’s and Don’ts for Charts and Graphs

- Or play with some fun interactive visualizations on income mobility and income inequality:

Use of Results

We typically draw one of three conclusions about a program based on assessment results: the program is effective, the program is conditionally effective, or the program is ineffective. To draw these conclusions, we need high-quality instruments, a solid data collection design, and evidence that the program was implemented as planned.

Assuming all of these conditions have been met and we are able to draw accurate conclusions about program effectiveness, our conclusions will determine how we use assessment results. For example, if we conclude that a program is ineffective, we should investigate all possible causes (e.g., low implementation fidelity, theory failure, insufficient programming) before devising a plan of action.

Program is Effective

- Report and share results

- Consider publishing results

- Expand program; apply for additional funding

- Continue to assess program outcomes and monitor quality of implementation

Program is Conditionally Effective

- Using implementation fidelity data, focus groups, and targeted survey questions, investigate for whom and under what conditions the program is effective

- Change/add to the program based on theory

- Keep the existing program and create supplementary/additional programming targeted to specific sub-populations

Program is Ineffective

Implementation Fidelity Issues

Was the program not implemented as planned or with high quality?


- Provide better training for facilitators

- Adjust the program schedule to ensure there is time to cover all topics (or remove less important topics)

- Add breaks/activities so students stay engaged

- Create an implementation fidelity checklist and have facilitators rate themselves (so they are more likely to adhere to the program curriculum)

Program Theory Failure

Were the activities/interventions misaligned with theory? Was the theory itself flawed?


- Choose a more plausible theory on which to base the program

- Choose different activities/interventions that better align with the theory

Insufficient Program Length/Strength

Was the program, while fundamentally correct in its approach, insufficient in length or strength?


- Request additional resources to lengthen the program

- Add more interventions aligned with theory

- Narrow the program's focus so that it is realistic for a smaller intervention

To learn more about use of results for program-related decisions, check out this brief video:

Learning Improvement

The primary purpose of assessment is to use the results to make programmatic changes that improve student learning/development. This learning improvement process requires practitioners to


- meaningfully assess student learning/development,

- effectively intervene via theory-based programming, and

- re-assess to verify better learning/development.

This assess, intervene, re-assess model is called the Simple Model for Learning Improvement. For more information about the simple model, click here.

Assess

It is important to collect data before a new intervention has been administered. These data provide a baseline against which future student achievement can be compared. Without this comparison, we cannot say that any changes in students' knowledge/skills/behaviors are due to the program.

Intervene

The most important part of the simple model is to administer a theory-based intervention. This step is often overlooked in the rush to collect and analyze data, but it's important to remember: garbage in, garbage out. The assessment of weak programs with no theoretical/empirical support rarely provides information that can be readily used for improvement. Thus, just as much time should be devoted to developing an intervention as is spent assessing its impact.

Re-Assess

A quick look at the assessment cycle above shows "use of results" as the last step. Understandably, then, this is where practitioners often stop: they collect, analyze, and interpret their data, then make logical changes to their programs accordingly. But this misses a crucial step. Unless practitioners re-assess after implementing a programmatic change, they cannot say that this change to the program was an improvement. In other words, assessment work is not complete until we collect evidence that a programmatic change actually improved student learning/development.

For more information about learning improvement at JMU, click here.

Additional Resources

Video: Using Assessment Results

Article: A Simple Model for Learning Improvement: Weigh Pig, Feed Pig, Weigh Pig (Fulcher, Good, Coleman, & Smith, 2014)